Your initial reaction to this is probably one of two things:

- Wow – Amazing! What a breakthrough

- Well this seems like a potential privacy issue further down the line

How have Wi-Fi systems been used to see through walls?

Researchers from UC Santa Barbara developed a system in which the edges of solid objects on one side of a solid barrier, i.e. a wall, can be traced from the other side using Wi-Fi signals. This includes letters of the alphabet.

In one experiment, this technology was used to work out the word ‘believe’ from the other side of a wall, imaging and deciphering the letters one by one.

They did this using three standard Wi-Fi transmitters, which sent wireless waves over a particular area. The imaging was based on measuring the signal power received across a Wi-Fi receiver grid, using receivers mounted on a movable vehicle.

3 transmitters, you say – would that not be triangulation? One of the researchers on this project states that triangulation is not being used. Instead, each transmitter merely provides a different viewpoint, illuminating the area from a different angle. This approach reduces the chance that one of the edges they are trying to detect will fall into a ‘blind region’. To leave a signature on the receiver grid, the edges need to be ‘seen’.

The key to the success of this tech system using Wi-Fi signals to see through walls is their edge-based approach. Triangulation in this instance would not perform well.

How can objects be tracked using Wi-Fi signals?

This tech system is based on Keller’s Geometrical Theory of Diffraction, specifically the cone of diffracted rays that forms when a wave hits an edge (aka the Keller cone).

Keller introduced his Geometrical Theory of Diffraction (GTD) in the 1950s. The development of this theory was revolutionary, as it explained the phenomenon of wave diffraction entirely in terms of rays for the first time, via a systematic generalisation of Fermat’s principle. In simple terms, it explains how a wave bends around an object (or obstacle) and how it spreads out across a particular space.

Using the principle of this theory, the system can interpret what could be on the other side of a wall.

Why is seeing through walls with Wi-Fi something new?

Scientists have actually been attempting to do this for years. This particular team at UC Santa Barbara has been working on it since 2009. This latest engineering breakthrough is significant because it was previously thought that anything as complex as reading the English alphabet through walls using Wi-Fi signals would be too difficult due to the complexity of the lettering.

Indeed, using Wi-Fi to image still objects (like letters) has been a challenge due to the lack of motion. However, they seem to have overcome this issue by tracing the edges of the objects.

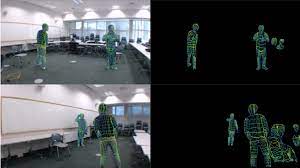

Back in July, we saw a similar technology engineered by researchers at Carnegie Mellon University, who tracked a 3D shape as well as human bodies moving in a room using Wi-Fi signals.

Seeing Through Walls With Wi-Fi – What will it be used for?

Assuming that this tech system matures and is put to wider use, we might find it useful for a few different applications:

- Crowd analytics

- Identifying individuals

- Boosting smart spaces

Is the resolution high enough?

Image resolution can be improved by moving to higher signal frequencies. However, this would also increase the propagation loss, so there would be a trade-off on energy.

This is likely where future experimentation will lie, as researchers and scientists look to explore this energy trade-off as frequencies and propagation loss increase.
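As a rough illustration of that trade-off, the sketch below compares wavelength (shorter wavelengths can generally resolve finer detail) with free-space path loss at a few frequencies. The frequencies and the 5 m distance are our own illustrative assumptions, not figures from the research, and the standard free-space formula used here ignores the extra loss from passing through a wall.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second

def wavelength_m(freq_hz):
    """Wavelength in metres for a given frequency."""
    return SPEED_OF_LIGHT / freq_hz

def free_space_path_loss_db(freq_hz, distance_m):
    """Free-space path loss in dB: 20 * log10(4 * pi * d * f / c)."""
    return 20.0 * math.log10(4.0 * math.pi * distance_m * freq_hz / SPEED_OF_LIGHT)

# Illustrative frequencies only: two common Wi-Fi bands and a much higher band.
for freq_ghz in (2.4, 5.0, 60.0):
    f = freq_ghz * 1e9
    print(f"{freq_ghz:5.1f} GHz: wavelength {wavelength_m(f) * 100:.2f} cm, "
          f"free-space loss over 5 m {free_space_path_loss_db(f, 5.0):.1f} dB")
```

In this simple model, every doubling of the frequency halves the wavelength but adds about 6 dB of loss over the same distance, which is the energy cost mentioned above.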

Is it just edges of objects that can be detected?

If this tech system of looking through walls with Wi-Fi signals is going to be widely adopted, then depth sensing would also be useful. This can be achieved by using a wideband signal for transmission.

The experiment discussed in this article was designed to showcase the performance of everyday radio signals, using 802.11n Wi-Fi, which is narrowband. However, the framework could easily be expanded to utilise wideband signals too.
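To see why bandwidth matters for depth sensing, a common rule of thumb from radar is that the finest separation in distance a signal can resolve is roughly c / (2B), where B is the bandwidth. The sketch below applies that rule to the 20 MHz and 40 MHz channel widths of 802.11n and to a hypothetical 1 GHz wideband signal. It is a back-of-the-envelope estimate under that assumption, not a description of how the researchers’ framework would handle depth.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second

def range_resolution_m(bandwidth_hz):
    """Rule-of-thumb range resolution c / (2B) for a signal of bandwidth B."""
    return SPEED_OF_LIGHT / (2.0 * bandwidth_hz)

# 802.11n channels are 20 MHz or 40 MHz wide; the 1 GHz entry is hypothetical.
bandwidths = (
    ("802.11n, 20 MHz channel", 20e6),
    ("802.11n, 40 MHz channel", 40e6),
    ("hypothetical 1 GHz wideband signal", 1e9),
)
for label, bandwidth_hz in bandwidths:
    print(f"{label}: about {range_resolution_m(bandwidth_hz):.2f} m")
```

With narrowband Wi-Fi the estimate comes out at several metres, larger than most rooms, which fits with the experiment focusing on edges rather than depth.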

What challenges could ‘seeing through walls’ with Wi-Fi face?

As we stated at the start of this article, some potential issues with using Wi-Fi signals to see through walls relate to privacy and security. These concerns could affect how successful this new tech system turns out to be.

Could cyber criminals use this technology as part of an attack? Could law enforcement officials abuse this technology in some contexts?

We wrote about Wi-Fi seeing through walls back in January, covering a similar piece of research that used ‘dense pose’ from Wi-Fi, treating Wi-Fi signals as a human sensor and using AI to map the position of human bodies. You can read it here.

What are your thoughts on this – We’d love to know! Which camp do you sit in – Amazing Breakthrough or Privacy Nightmare? Get in touch on Facebook, X or Instagram.