American researchers based in Pennsylvania have shown a way to map the position of human bodies using Wi-Fi signals and a deep neural network, a form of AI (Artificial Intelligence) built on Machine Learning.

By analysing the phase and amplitude of Wi-Fi signals, Carnegie Mellon University researchers can see where people are, even through walls!
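The article doesn't go into the signal processing, so here is a generic sketch of what "phase and amplitude" usually means in Wi-Fi sensing work. It assumes the receiver reports channel state information (CSI) as one complex number per subcarrier: amplitude is its magnitude and phase is its angle. The clean-up step (unwrapping the phase, then removing a straight-line trend across subcarriers) is a common technique in the CSI literature and an assumption here, not necessarily exactly what the CMU team did. The function name `amplitude_and_phase` is hypothetical.

```python
import numpy as np


def amplitude_and_phase(csi: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Return (amplitude, sanitised phase) for complex CSI values.

    Assumption: `csi` has shape (..., n_subcarriers), with the last axis
    ordered by subcarrier frequency.
    """
    amplitude = np.abs(csi)

    # np.angle wraps the phase into (-pi, pi]; unwrap it across subcarriers.
    phase = np.unwrap(np.angle(csi), axis=-1)

    # Least-squares fit of a straight line (offset + slope) against the
    # subcarrier index, then subtract it. This is commonly used to strip
    # linear phase errors caused by clock/timing offsets between the radios.
    k = np.arange(csi.shape[-1], dtype=float)
    k_centred = k - k.mean()
    phase_centred = phase - phase.mean(axis=-1, keepdims=True)
    slope = np.sum(phase_centred * k_centred, axis=-1, keepdims=True) / np.sum(k_centred**2)
    sanitised = phase_centred - slope * k_centred

    return amplitude, sanitised


# Synthetic example: constant amplitude, purely linear phase across 30 subcarriers.
k = np.arange(30)
example_csi = 2.0 * np.exp(1j * (0.3 * k + 1.0))
amp, ph = amplitude_and_phase(example_csi)
# amp is 2.0 everywhere; ph is ~0 because the synthetic phase was purely linear.
```

In real measurements, what is left after removing the linear trend is the part of the phase that changes as people move, which is what a model can learn from.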

Over the last few years, researchers and scientists have done a lot of work in this area. They've been looking at 'human pose estimation', which means identifying the joints of the human body and using sensors to work out body position and movement. To experiment with this, they've looked at:

- RGB cameras (used to deliver coloured images of people and objects by capturing light in red, green, and blue wavelengths)

- LiDAR (a Light Detection and Ranging system which works on the principle of radar, but uses light from a laser)

- Radar (a radiolocation system that uses radio waves to determine the distance, angle, and radial velocity of objects relative to a site)

Why is this useful? Sensors that detect body position and movement could be used for video gaming, healthcare, AR (Augmented Reality), sports and more.

The problem is that doing this with imagery (i.e. cameras) can be tricky, because poor lighting or objects blocking the view can affect the results.

Radar and LiDAR, on the other hand, are not only expensive but also require a lot of power.

Enter, Wi-Fi.

Using Wi-Fi Signals as a Human Sensor

The team at CMU in Pennsylvania decided to look into using standard Wi-Fi antennas together with a deep learning architecture to detect body position.

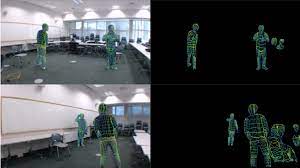

How does it work? A deep neural network maps the phase and amplitude of the Wi-Fi signals to UV coordinates (positions on the body's surface) within 24 regions of the human body.
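To make "UV coordinates within 24 regions" concrete: DensePose-style models typically output, for every pixel, a score for each of the 24 body parts plus background, and a predicted (U, V) position on each part's surface. The sketch below decodes such raw outputs into a part label and (U, V) pair per pixel. The array shapes and the function name `decode_dense_pose` are assumptions for illustration; the network that produces the arrays is not shown.

```python
import numpy as np

N_PARTS = 24  # body surface regions; index 0 is reserved for background


def decode_dense_pose(part_logits: np.ndarray, u_maps: np.ndarray, v_maps: np.ndarray):
    """Turn per-pixel outputs into (part, u, v) maps.

    Assumed shapes (hypothetical layout):
      part_logits: (25, H, W) scores for background (0) and parts 1..24
      u_maps, v_maps: (24, H, W) predicted U and V for each part
    Returns part (H, W) ints with 0 = background, and u, v (H, W) floats
    that are NaN on background pixels.
    """
    part = np.argmax(part_logits, axis=0)  # most likely label per pixel
    h_idx, w_idx = np.indices(part.shape)

    # Part p (1..24) reads channel p-1 of the U/V maps. Clip so background
    # pixels still index a valid channel; they are masked out below.
    channel = np.clip(part - 1, 0, N_PARTS - 1)
    u = u_maps[channel, h_idx, w_idx].astype(float)
    v = v_maps[channel, h_idx, w_idx].astype(float)

    background = part == 0
    u[background] = np.nan
    v[background] = np.nan
    return part, u, v
```

Each non-background pixel ends up tied to one body region and a point on that region's surface, which is what lets the system draw a dense outline of the body rather than just a few joints.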

Their study showed that, using Wi-Fi signals as the only input, their model can estimate the dense pose of multiple subjects, with performance comparable to image-based approaches.

As we said above, the other methods use a lot of power and are expensive. The Wi-Fi method outlined in the 'DensePose From Wi-Fi' paper offers a lower-cost alternative that is more widely accessible. The paper also says it paves the way for privacy-preserving algorithms, which would make human sensing in non-public areas less invasive than using Radar or LiDAR.

Admittedly, research into seeing people through walls doesn't sound particularly privacy-preserving, does it!

Has Wi-Fi Been Used as a Human Sensor Before?

Whilst the idea of monitoring people in a room using Wi-Fi isn't new, the data collected previously wasn't very clear, making it hard to visualise what a person was actually doing in the room.

The difference with this new research from CMU is that it uses DensePose and machine learning not only to estimate what the target person is doing, but also to show it clearly as a visual representation.

As we said earlier, it's also more accessible. The setup they used needed just two wireless routers, each with three antennas, operating on the standard 2.4GHz band.
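Assuming one router transmits and the other receives, three antennas on each give 3 × 3 = 9 transmit-receive paths, and each path is measured on many subcarriers. The small sketch below just does that bookkeeping. The class name `WifiSetup` is hypothetical, and the default of 30 subcarriers per path is an assumption (a count some CSI tools report), not a figure from this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WifiSetup:
    tx_antennas: int = 3   # antennas on the transmitting router
    rx_antennas: int = 3   # antennas on the receiving router
    subcarriers: int = 30  # assumption: subcarriers reported per antenna pair

    @property
    def antenna_pairs(self) -> int:
        # Every transmit antenna is heard by every receive antenna.
        return self.tx_antennas * self.rx_antennas

    def values_per_packet(self) -> int:
        # One complex channel measurement per antenna pair per subcarrier.
        return self.antenna_pairs * self.subcarriers

    def real_features_per_packet(self) -> int:
        # Each complex value splits into an amplitude and a phase.
        return 2 * self.values_per_packet()


setup = WifiSetup()
print(setup.antenna_pairs, setup.values_per_packet(), setup.real_features_per_packet())
```

Even this modest hardware yields hundreds of numbers per packet, which is part of why a deep neural network is a good fit for interpreting the signals.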

All you would need to do is place a router on either side of the target and gather the data, which requires full control of both units.

Whilst it’s more straightforward than Radar or LiDAR, there are still a couple of flaws. The range is limited by the weakness of Wi-Fi signals, and the accuracy could still be an issue too.
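One way to see why range is limited: even in open space, received signal power falls with the square of distance. The textbook free-space path loss formula below is an idealisation and is not taken from the paper; walls, furniture and people only add further loss on top of it. The function name is hypothetical.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def free_space_path_loss_db(distance_m: float, frequency_hz: float) -> float:
    """Free-space path loss in dB: 20 * log10(4 * pi * d * f / c).

    Ignores walls, furniture and multipath, so real indoor loss is higher.
    """
    if distance_m <= 0 or frequency_hz <= 0:
        raise ValueError("distance and frequency must be positive")
    return 20 * math.log10(4 * math.pi * distance_m * frequency_hz / SPEED_OF_LIGHT)


for d in (1, 2, 4, 8):
    print(f"{d} m at 2.4 GHz: {free_space_path_loss_db(d, 2.4e9):.1f} dB")
```

Every doubling of distance adds roughly 6 dB of loss, and ten times the distance adds 20 dB, so the weak Wi-Fi signal that reaches the receiver, and the detail it carries about people in between, fades quickly as the routers move apart.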

‘DensePose From Wi-Fi’ Paper Summary

The main things to take from this recent research are:

- Wi-Fi signals make it possible to identify dense human body poses by using deep learning architectures

- The limited public training data available for Wi-Fi-based perception restricts the performance of the current model, especially across different room layouts

- The system has some difficulty identifying and representing less common body poses, and also struggles when 3 or more people are present at the same time

- Future research will aim to collect multi-layout data and use a bigger dataset in order to predict 3D body shapes from Wi-Fi signals and interpret the data correctly

The researchers believe that this Wi-Fi-based model could enable low-cost human sensing as an alternative to RGB cameras and LiDAR.

If you want to read more, you can access the ‘DensePose From Wi-Fi’ paper here.

Here at Geekabit we’re interested to see what comes up when this is peer reviewed. What are your thoughts on using Wi-Fi signals to map and visualise people inside rooms?